Securing the AI Application Stack

See how attackers target your AI systems

Protect models, prompts, plugins, and data with runtime validation, signing, licensing, and provenance.

The problem legacy tools create

AI apps face new risks: prompt injection, plugin abuse, and vector database leaks.

Legacy tools lack signing, licensing, and provenance controls for AI artifacts.

How our approach solves these problems

1

AI posture management

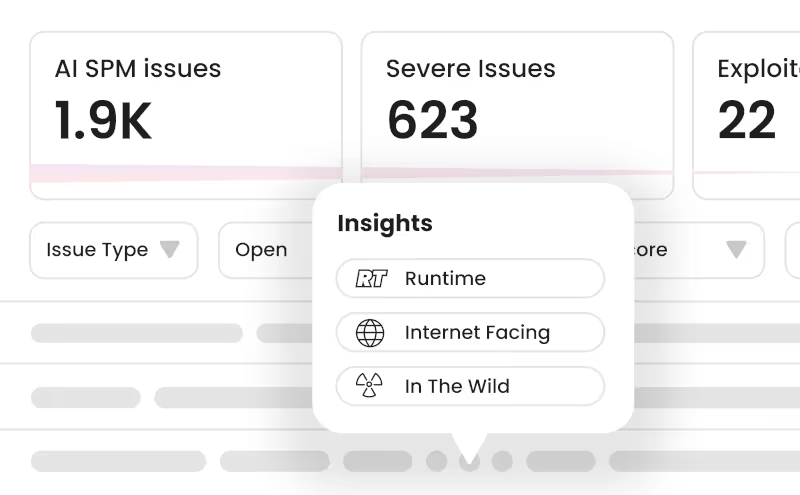

Posture management for all AI artifacts: models, AI code editors, prompts, plugins, and databases

2

LLM and AI code editor vulnerability detection

Detects injection attacks, remote code execution (RCE), data leakage, and denial of service (DoS)

3

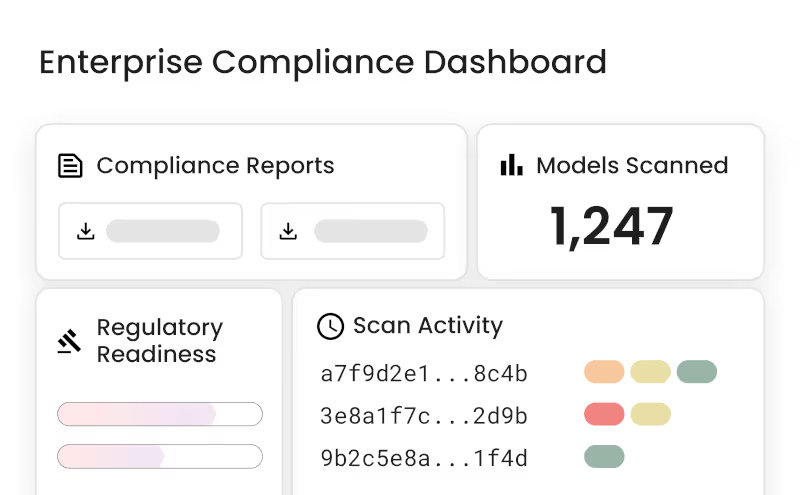

AI supply chain security

AI bills of materials (AI BOMs), artifact signing, license checks, and provenance tracking

4

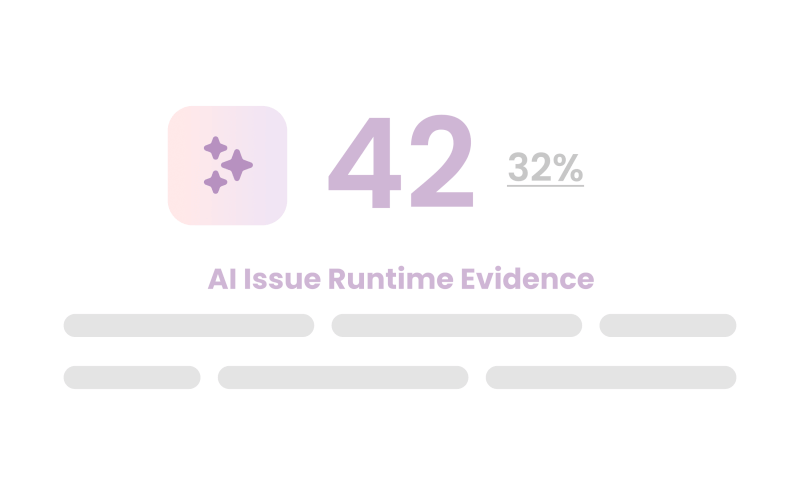

Runtime validation

Confirms that models and plugins are called in the expected sequence
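To illustrate what checking a model-plugin call sequence can look like, here is a minimal sketch that validates a recorded trace against an allowlist of permitted transitions. The step names (`model`, `search_plugin`, `calendar_plugin`) and the `ALLOWED_NEXT` policy are hypothetical, invented for this example; this is not a description of how Kodem implements runtime validation.

```python
from typing import Optional

# Hypothetical policy: for each step, the set of steps allowed to follow it.
# "start" and "end" are sentinel steps marking the boundaries of a request.
ALLOWED_NEXT = {
    "start": {"model"},
    "model": {"search_plugin", "calendar_plugin", "end"},
    "search_plugin": {"model"},    # plugin output must go back to the model
    "calendar_plugin": {"model"},
}


def first_violation(trace: list[str]) -> Optional[tuple[int, str]]:
    """Return (index, step) of the first disallowed transition, or None.

    An index equal to len(trace) with step "end" means the trace stopped
    at a step that is not allowed to end a request.
    """
    prev = "start"
    for i, step in enumerate(trace + ["end"]):
        if step not in ALLOWED_NEXT.get(prev, set()):
            return i, step
        prev = step
    return None


if __name__ == "__main__":
    print(first_violation(["model", "search_plugin", "model"]))            # None
    print(first_violation(["model", "search_plugin", "calendar_plugin"]))  # (2, 'calendar_plugin')
```

An allowlist of transitions keeps the policy small and auditable; a plugin calling another plugin directly, or a request ending mid-plugin call, shows up as a single flagged step.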


How Kodem helped

A summarization model was integrated without a signature or license metadata.

Kodem generated an AI BOM, flagged the missing provenance, and blocked deployment until the model was validated.
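A pre-deployment gate like the one in this example can be sketched as a set of checks over a model's AI BOM entry. This is a minimal illustration under stated assumptions: the `ModelBomEntry` fields, the `APPROVED_LICENSES` allowlist, and the `deployment_findings`/`gate` helpers are all hypothetical, and the signature check only tests for presence rather than verifying it cryptographically. It is not Kodem's implementation.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical license allowlist; real policies are organization-specific.
APPROVED_LICENSES = {"apache-2.0", "mit", "bsd-3-clause"}


@dataclass
class ModelBomEntry:
    """One model component in a hypothetical AI BOM (illustrative fields only)."""
    name: str
    version: str
    signature: Optional[str] = None   # detached signature, if one was published
    license: Optional[str] = None     # SPDX-style license identifier
    source_url: Optional[str] = None  # where the artifact came from (provenance)


def deployment_findings(entry: ModelBomEntry) -> list[str]:
    """Return policy violations; an empty list means the gate would pass."""
    findings = []
    # Presence check only; a real gate would verify the signature cryptographically.
    if not entry.signature:
        findings.append("missing signature")
    if not entry.license:
        findings.append("missing license metadata")
    elif entry.license.lower() not in APPROVED_LICENSES:
        findings.append(f"license not approved: {entry.license}")
    if not entry.source_url:
        findings.append("missing provenance")
    return findings


def gate(entry: ModelBomEntry) -> bool:
    """Allow deployment only when there are no findings; report each one."""
    findings = deployment_findings(entry)
    for finding in findings:
        print(f"BLOCKED {entry.name}@{entry.version}: {finding}")
    return not findings


if __name__ == "__main__":
    # A model with no signature or license metadata, as in the example above.
    summarizer = ModelBomEntry(name="summarizer", version="1.0")
    print(gate(summarizer))
```

Running it prints one `BLOCKED` line per missing control and then `False`, so deployment stays blocked until the entry is completed and re-checked.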

Ensure 100% of deployed models are verified and licensed

Prevent AI-specific exploit classes

Provide audit-ready AI BOMs for ISO/IEC 42001 compliance and AI governance

"Kai saved our engineers time, 10x’d our team, and gave us visibility we never had."